An RDF abstraction for the JVM

Commons RDF is an effort from the Jena and Sesame communities to define a common library for RDF 1.1 on the JVM. In my opinion, the current proposal suffers from design issues which seriously limit interoperability despite the stated objective. In this article, I will explain the limits of the current design and discuss alternatives to address the flaws.

This article is as much about RDF on the JVM as it is about API design and abstractions in Java. No prior knowledge of RDF is required as I will introduce the RDF model itself. So you might end up learning what RDF is as a side-effect :-)

the problem Commons RDF wants to solve

For a long time now, if you wanted to do RDF (and SPARQL) stuff in Java, you basically had the choice between Jena and Sesame. Those two libraries were developed independently and didn’t share much, despite the fact that they are both implementations of well-defined Web standards.

So people have come up with ways to go back and forth between those two worlds: object adapters, meta APIs, ad-hoc APIs, etc. For example, let’s say you wanted to use that awesome asynchronous parser library for Turtle. It returns a Jena graph while your stack is mainly Sesame? Well, too bad for you. So you use an adapter which wraps every single object in your graph.

So let’s say you have the opportunity to solve those problems. What would you do? If you have done software development for a while, especially if it was in Java, your first thought might be about defining a common class hierarchy coupled with an abstract factory. Then you could go back to the author of the Turtle library with a Pull Request using the new common interfaces, and everybody is happy, right?

Let’s see how this pans out in the case of Commons RDF 0.1.

Commons RDF

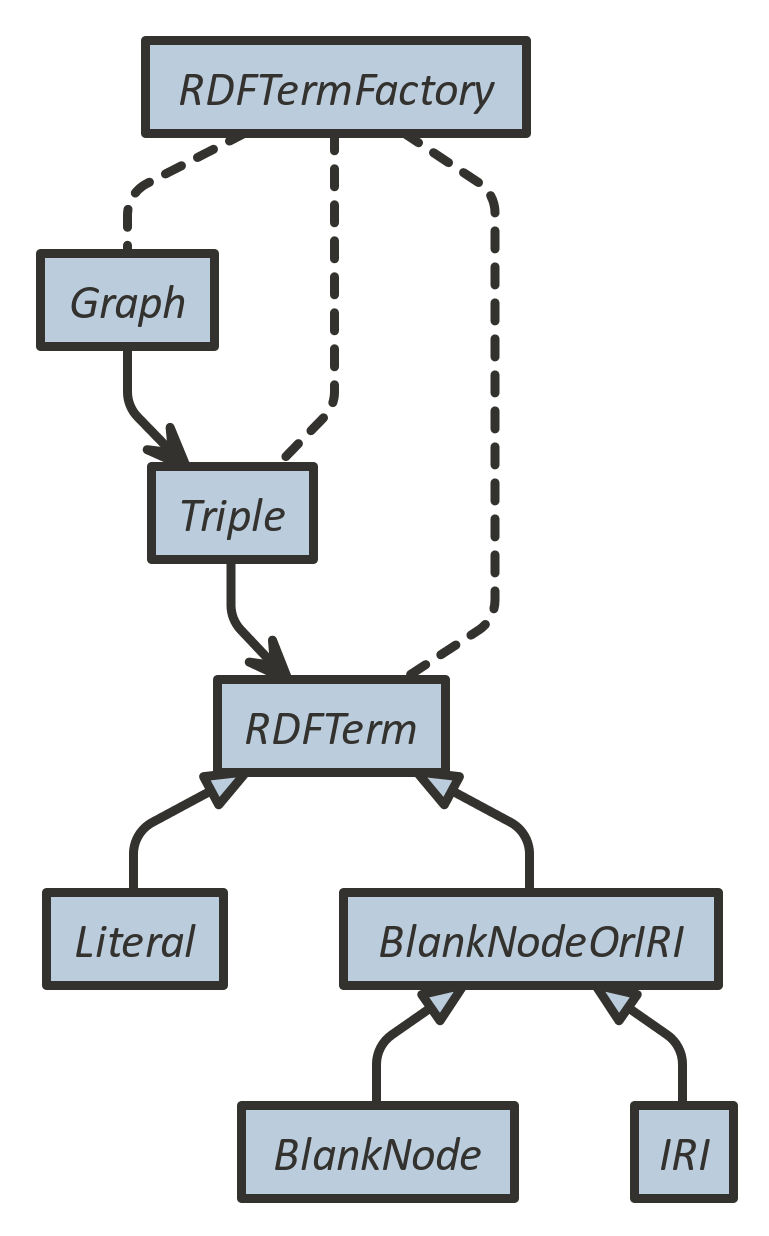

Commons RDF closely follows the concepts defined in RDF 1.1, including the terms used. It specifically targets plain RDF (as opposed to Generalized RDF) and wants to be as type-safe as possible, e.g. only IRIs and blank nodes are accepted in the subject position of a triple.

Here is an overview of the design of Commons RDF:


- each RDF concept is mapped onto a Java interface: Graph, Triple, RDFTerm, IRI, BlankNode, Literal
- there is an additional concept: BlankNodeOrIRI
- there are sub-type relationships between RDFTerm, BlankNodeOrIRI, IRI, BlankNode, and Literal
- the interfaces expose methods to access their components
- the factory RDFTermFactory knows how to create concrete instances of the interfaces

Here is a quick look at what RDF actually is in the Commons RDF world (this is basically copied from the source code):

    package org.apache.commons.rdf.api;

    public interface Graph {
        void add(Triple triple);
        boolean contains(Triple triple);
        Stream<? extends Triple> getTriples();
        ...
    }

    public interface Triple {
        BlankNodeOrIRI getSubject();
        IRI getPredicate();
        RDFTerm getObject();
    }

    public interface RDFTerm {
        String ntriplesString();
    }

    public interface BlankNodeOrIRI extends RDFTerm { }

    public interface IRI extends BlankNodeOrIRI {
        String getIRIString();
    }

    public interface BlankNode extends BlankNodeOrIRI {
        String uniqueReference();
    }

    public interface Literal extends RDFTerm {
        String getLexicalForm();
        IRI getDatatype();
        Optional<String> getLanguageTag();
    }

    public interface RDFTermFactory {
        default Graph createGraph() throws UnsupportedOperationException { ... }

        default IRI createIRI(String iri)
            throws IllegalArgumentException, UnsupportedOperationException { ... }

        /** The returned blank node MUST NOT be equal to any existing */
        default BlankNode createBlankNode()
            throws UnsupportedOperationException { ... }

        /** All `BlankNode`s created with the given `name` MUST be equivalent */
        default BlankNode createBlankNode(String name)
            throws UnsupportedOperationException { ... }

        default Literal createLiteral(String lexicalForm)
            throws IllegalArgumentException, UnsupportedOperationException { ... }

        default Literal createLiteral(String lexicalForm, IRI dataType)
            throws IllegalArgumentException, UnsupportedOperationException { ... }

        default Literal createLiteral(String lexicalForm, String languageTag)
            throws IllegalArgumentException, UnsupportedOperationException { ... }

        default Triple createTriple(BlankNodeOrIRI subject, IRI predicate, RDFTerm object)
            throws IllegalArgumentException, UnsupportedOperationException { ... }
    }

Everything actually looks good and pretty standard, right? So you might be wondering why I am not that thrilled by this approach. Keep on reading then :-)

class-based design

As a reminder, in most statically typed languages, types are only compile-time information. In Java, classes and interfaces are just a reified version of types (up to generics, which get erased at compile time), meaning that they are an (incomplete by design) abstraction for types that can be manipulated at runtime.

Commons RDF decided to model the RDF types using interfaces. In Java, interfaces and classes rely on what we call nominal subtyping. It means that a concrete implementation is required to explicitly extend (or implement) an interface to be considered a subtype.

In other words, despite java.util.UUID being a perfectly acceptable candidate for being a BlankNode, it is impossible to use it directly because UUID does not implement BlankNode, so UUID has to be wrapped. There are actually many other cases like that: java.net.URI or akka.http.model.Uri are acceptable candidates for IRI, java.lang.String or java.lang.Integer for Literal, etc.
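To make the cost concrete, here is a minimal sketch of the wrapper that nominal subtyping forces on an implementer. The three interfaces are trimmed-down local copies of the ones shown earlier, not the real org.apache.commons.rdf.api package, and `UuidBlankNode` is a hypothetical name chosen for illustration.

```java
import java.util.UUID;

// Trimmed-down local copies of the Commons RDF interfaces shown earlier.
interface RDFTerm { String ntriplesString(); }
interface BlankNodeOrIRI extends RDFTerm { }
interface BlankNode extends BlankNodeOrIRI { String uniqueReference(); }

// Hypothetical wrapper: a UUID cannot be passed where a BlankNode is expected,
// so every UUID has to be boxed in an extra object.
final class UuidBlankNode implements BlankNode {
    private final UUID uuid;

    UuidBlankNode(UUID uuid) { this.uuid = uuid; }

    // Callers that need the original value must unwrap it again.
    UUID unwrap() { return uuid; }

    @Override public String uniqueReference() { return uuid.toString(); }

    @Override public String ntriplesString() { return "_:" + uuid; }
}

class WrapperDemo {
    public static void main(String[] args) {
        UUID id = UUID.randomUUID();
        // BlankNode bn = id;  // does not compile: UUID is not a BlankNode
        BlankNode bn = new UuidBlankNode(id);
        System.out.println(bn.ntriplesString());
    }
}
```

The wrapper adds nothing to the UUID itself; it only exists to satisfy the `implements` clause.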

So here is my first and main complaint about Commons RDF: it forces implementers to coerce their types into its own class hierarchy and there is no good reason for doing so.

generics

How can we define abstract types and operations on them without relying on class/interface inheritance? You already know the answer, as it is the same story as with java.util.Comparator<T> and java.lang.Comparable<T>.
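As a quick refresher on that analogy, the sketch below orders a type that does not implement Comparable by passing the ordering alongside the data. `Point`, `ComparatorDemo` and `BY_X_THEN_Y` are made-up names for this illustration only.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// A type we pretend not to control: it does not implement Comparable.
final class Point {
    final int x;
    final int y;

    Point(int x, int y) { this.x = x; this.y = y; }
}

class ComparatorDemo {
    // The ordering lives outside Point, as a value handed to whoever needs it.
    static final Comparator<Point> BY_X_THEN_Y =
        Comparator.<Point>comparingInt(p -> p.x).thenComparingInt(p -> p.y);

    // Returns a sorted copy; the input list is left untouched.
    static List<Point> sorted(List<Point> points) {
        List<Point> copy = new ArrayList<>(points);
        copy.sort(BY_X_THEN_Y);
        return copy;
    }

    public static void main(String[] args) {
        List<Point> points = Arrays.asList(new Point(2, 1), new Point(1, 5), new Point(1, 2));
        for (Point p : sorted(points)) {
            System.out.println(p.x + "," + p.y);
        }
    }
}
```

Comparable requires the class itself to opt in, while Comparator works with any existing class. The rest of this article applies the second idea to RDF.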

Let’s see what the factory would look like with this approach:

    public interface RDFTermFactory<Graph,
                                    Triple,
                                    RDFTerm,
                                    BlankNodeOrIRI extends RDFTerm,
                                    IRI extends BlankNodeOrIRI,
                                    BlankNode extends BlankNodeOrIRI,
                                    Literal extends RDFTerm> {

        /* same factory functions as before go here */

    }

Instead of referring to Java interfaces, we now refer to the newly introduced generics. In a way, generics are more abstract than interfaces. Also, generics let you express the subtype relationship using extends.

As you have probably already noticed, that only gives us a way to create inhabitants for those types. We also need a way to access their components.

RDF module

Accessing components was the role of the methods defined on the interfaces. So we just have to move them into the factory and make them functions instead. And because the factory is now made of all the operations actually defining the RDF model, we can refer to it as the RDF module.

    public interface RDF<Graph,
                         Triple,
                         RDFTerm,
                         BlankNodeOrIRI extends RDFTerm,
                         IRI extends BlankNodeOrIRI,
                         BlankNode extends BlankNodeOrIRI,
                         Literal extends RDFTerm> {

        // from org.apache.commons.rdf.api.RDFTermFactory
        BlankNode createBlankNode();
        BlankNode createBlankNode(String name);
        Graph createGraph();
        IRI createIRI(String iri) throws IllegalArgumentException;
        Literal createLiteral(String lexicalForm) throws IllegalArgumentException;
        Literal createLiteral(String lexicalForm, IRI dataType) throws IllegalArgumentException;
        Literal createLiteral(String lexicalForm, String languageTag) throws IllegalArgumentException;
        Triple createTriple(BlankNodeOrIRI subject, IRI predicate, RDFTerm object) throws IllegalArgumentException;

        // from org.apache.commons.rdf.api.Graph
        Graph add(Graph graph, BlankNodeOrIRI subject, IRI predicate, RDFTerm object);
        Graph add(Graph graph, Triple triple);
        Graph remove(Graph graph, BlankNodeOrIRI subject, IRI predicate, RDFTerm object);
        boolean contains(Graph graph, BlankNodeOrIRI subject, IRI predicate, RDFTerm object);
        Stream<? extends Triple> getTriplesAsStream(Graph graph);
        Iterable<Triple> getTriplesAsIterable(Graph graph, BlankNodeOrIRI subject, IRI predicate, RDFTerm object);
        long size(Graph graph);

        // from org.apache.commons.rdf.api.Triple
        BlankNodeOrIRI getSubject(Triple triple);
        IRI getPredicate(Triple triple);
        RDFTerm getObject(Triple triple);

        // from org.apache.commons.rdf.api.RDFTerm
        <T> T visit(RDFTerm t,
                    Function<IRI, T> fIRI,
                    Function<BlankNode, T> fBNode,
                    Function<Literal, T> fLiteral);

        // from org.apache.commons.rdf.api.IRI
        String getIRIString(IRI iri);

        // from org.apache.commons.rdf.api.BlankNode
        String uniqueReference(BlankNode bnode);

        // from org.apache.commons.rdf.api.Literal
        IRI getDatatype(Literal literal);
        Optional<String> getLanguageTag(Literal literal);
        String getLexicalForm(Literal literal);
    }

We are doing exactly what Python does with self: methods are just functions whose first argument is what used to be the receiver (aka the object) of the method.
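To illustrate how the generics can be bound to classes that already exist, here is a sketch of a much reduced module. `MiniRDF` and `JdkRDF` are hypothetical names; they cover only terms (no graphs or triples) and are an assumption made for this example, not part of any library.

```java
import java.net.URI;
import java.util.UUID;

// Hypothetical, reduced version of the RDF module: terms only.
interface MiniRDF<RDFTerm,
                  BlankNodeOrIRI extends RDFTerm,
                  IRI extends BlankNodeOrIRI,
                  BlankNode extends BlankNodeOrIRI,
                  Literal extends RDFTerm> {
    IRI createIRI(String iri) throws IllegalArgumentException;
    BlankNode createBlankNode();
    Literal createLiteral(String lexicalForm);

    String getIRIString(IRI iri);
    String uniqueReference(BlankNode bnode);
    String getLexicalForm(Literal literal);
}

// Binds the generics to plain JDK classes, so no wrapper objects are needed.
// Object plays the role of RDFTerm and BlankNodeOrIRI because it is the only
// class that URI, UUID and String all extend.
final class JdkRDF implements MiniRDF<Object, Object, URI, UUID, String> {
    @Override public URI createIRI(String iri) { return URI.create(iri); }
    @Override public UUID createBlankNode() { return UUID.randomUUID(); }
    @Override public String createLiteral(String lexicalForm) { return lexicalForm; }

    @Override public String getIRIString(URI iri) { return iri.toString(); }
    @Override public String uniqueReference(UUID bnode) { return bnode.toString(); }
    @Override public String getLexicalForm(String literal) { return literal; }
}

class JdkRDFDemo {
    public static void main(String[] args) {
        JdkRDF rdf = new JdkRDF();
        // The user sees the concrete type, so URI's own methods are available.
        URI alice = rdf.createIRI("http://example.com/Alice");
        System.out.println(alice.getHost());
        System.out.println(rdf.getIRIString(alice));
    }
}
```

Code written against `MiniRDF` stays generic, while code that knows it holds a `JdkRDF` gets real `URI`, `UUID` and `String` values back.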

For the sake of brevity, I am actually showing you the final result for the RDF module. Let’s discuss the other issues that were fixed at the same time.

visitor

In Commons RDF 0.1, an RDFTerm is either an IRI or a BlankNode or a Literal. It is not clear to me how a user can dispatch a function over an RDFTerm based on its actual nature.

My best guess is that one is expected to use instanceof to discriminate between the possible interfaces. In practice, this cannot really work. As a counter-example, consider this implementation of RDFTerm which relies on the N-Triples encoding of the term:

    public class NTriplesBased implements RDFTerm, IRI, BlankNode, Literal {
        private String ntriplesRepresentation;
        ...
    }
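The following sketch spells out why instanceof cannot discriminate such a class. The interfaces are trimmed-down local copies of the Commons RDF ones (Literal in particular omits its datatype and language tag), the accessors are deliberately naive, and `InstanceofDemo.kind` is a hypothetical helper showing the dispatch a user would naturally write.

```java
// Trimmed-down local copies of the Commons RDF interfaces shown earlier.
interface RDFTerm { String ntriplesString(); }
interface BlankNodeOrIRI extends RDFTerm { }
interface IRI extends BlankNodeOrIRI { String getIRIString(); }
interface BlankNode extends BlankNodeOrIRI { String uniqueReference(); }
interface Literal extends RDFTerm { String getLexicalForm(); }

// One class for all three kinds of term, backed by the N-Triples encoding.
// The accessors are placeholders; only the type relationships matter here.
final class NTriplesBased implements IRI, BlankNode, Literal {
    private final String ntriplesRepresentation;

    NTriplesBased(String ntriplesRepresentation) {
        this.ntriplesRepresentation = ntriplesRepresentation;
    }

    @Override public String ntriplesString() { return ntriplesRepresentation; }
    @Override public String getIRIString() { return ntriplesRepresentation; }
    @Override public String uniqueReference() { return ntriplesRepresentation; }
    @Override public String getLexicalForm() { return ntriplesRepresentation; }
}

class InstanceofDemo {
    // Dispatch by runtime type: the first test always wins for NTriplesBased.
    static String kind(RDFTerm term) {
        if (term instanceof IRI) return "IRI";
        if (term instanceof BlankNode) return "BlankNode";
        if (term instanceof Literal) return "Literal";
        return "unknown";
    }

    public static void main(String[] args) {
        // A literal and a blank node are both reported as IRIs.
        System.out.println(kind(new NTriplesBased("\"Alice\"")));
        System.out.println(kind(new NTriplesBased("_:b1")));
    }
}
```

Every instance passes all three instanceof tests, so the answer depends on the order of the checks rather than on the term.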

So how does one visit a class-hierarchy in Java? By using the Gang of Four’s Visitor Pattern of course! Ah ah, just kidding. It’s 2015, we can now have a stateless and polymorphic version of the visitor pattern. Actually, we can do even better using Java 8’s lambdas.

The RDF#visit function defined above in the RDF module is a visitor on steroids:

    <T> T visit(RDFTerm t,
                Function<IRI, T> fIRI,
                Function<BlankNode, T> fBNode,
                Function<Literal, T> fLiteral);

The contract for RDF#visit is pretty simple: dispatch the right function – fIRI or fBNode or fLiteral – by case, depending on what the RDFTerm t actually is. Note that the function itself is parameterized on the return type, so that any computation can be defined. And finally, as explained before, the element part of the visitor – the RDFTerm itself – has become the first argument of the function, instead of being the receiver of a method.

Finally, here is what it looks like on the user side:

    RDFTerm term = ???;

    String someString = rdf.visit(term,
                                  iri -> rdf.getIRIString(iri),
                                  bnode -> rdf.uniqueReference(bnode),
                                  literal -> rdf.getLexicalForm(literal));
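On the implementer side, a term represented by its N-Triples encoding could dispatch on the leading characters of the string. This is only a sketch under that assumption: `TermDispatch` is a hypothetical slice of the RDF module containing nothing but visit, and `NTriplesDispatch` binds every term type to String.

```java
import java.util.function.Function;

// Hypothetical slice of the RDF module: only the visit function.
interface TermDispatch<RDFTerm,
                       IRI extends RDFTerm,
                       BlankNode extends RDFTerm,
                       Literal extends RDFTerm> {
    <T> T visit(RDFTerm t,
                Function<IRI, T> fIRI,
                Function<BlankNode, T> fBNode,
                Function<Literal, T> fLiteral);
}

// Every term is its N-Triples encoding, held in a plain String.
final class NTriplesDispatch implements TermDispatch<String, String, String, String> {
    @Override
    public <T> T visit(String t,
                       Function<String, T> fIRI,
                       Function<String, T> fBNode,
                       Function<String, T> fLiteral) {
        // The module, not the runtime class of the term, decides which case applies.
        if (t.startsWith("<")) return fIRI.apply(t);
        if (t.startsWith("_:")) return fBNode.apply(t);
        if (t.startsWith("\"")) return fLiteral.apply(t);
        throw new IllegalArgumentException("not an N-Triples term: " + t);
    }
}

class VisitDemo {
    public static void main(String[] args) {
        NTriplesDispatch rdf = new NTriplesDispatch();
        String kind = rdf.visit("_:b1", iri -> "IRI", bnode -> "BlankNode", literal -> "Literal");
        System.out.println(kind);
    }
}
```

The dispatch logic sits in one place, and callers never need instanceof.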

downcasting

RDFTermFactory follows the Abstract Factory pattern which is in practice very limited. Pretty often, seeing the generic interface is just not enough and people end up downcasting anyway because other functionalities may need to be exposed from the sub-types.

In my opinion, this is a big issue in something like Commons RDF and we can do better. In fact, it comes for free in the RDF module defined above, as the user sees the types that were actually bound to the generics.

immutable graph

If you look at Graph#add(Triple) you’ll see that it returns void: graphs in Commons RDF 0.1 have to be mutated in place and there is no way around it. This is wrong, but do not expect me to use this post to make the case for allowing immutable graphs: it’s 2015 and I should not have to do that.

Especially when the fix is very simple: just make add return a new Graph. That’s actually what Graph RDF#add(Graph,Triple) does.

Note that with this approach, one can still manipulate mutable graphs. It’s just that code using RDF#add should always use the returned Graph, even if it happens to have been mutated in place.
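Here is a sketch of how both flavours can sit behind the same signature. `GraphOps` is a hypothetical slice of the RDF module restricted to graphs, and both implementations use a java.util.Set of triples as the graph, which is an assumption made to keep the example short.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

// Hypothetical slice of the RDF module: graphs only, triples kept abstract.
interface GraphOps<Graph, Triple> {
    Graph createGraph();
    Graph add(Graph graph, Triple triple);
    boolean contains(Graph graph, Triple triple);
}

// Immutable flavour: add copies the set and leaves its argument untouched.
final class ImmutableGraphOps<Triple> implements GraphOps<Set<Triple>, Triple> {
    @Override public Set<Triple> createGraph() { return Collections.emptySet(); }

    @Override public Set<Triple> add(Set<Triple> graph, Triple triple) {
        Set<Triple> copy = new HashSet<>(graph);
        copy.add(triple);
        return Collections.unmodifiableSet(copy);
    }

    @Override public boolean contains(Set<Triple> graph, Triple triple) {
        return graph.contains(triple);
    }
}

// Mutable flavour: add mutates in place and returns the same instance.
final class MutableGraphOps<Triple> implements GraphOps<Set<Triple>, Triple> {
    @Override public Set<Triple> createGraph() { return new HashSet<>(); }

    @Override public Set<Triple> add(Set<Triple> graph, Triple triple) {
        graph.add(triple);
        return graph;
    }

    @Override public boolean contains(Set<Triple> graph, Triple triple) {
        return graph.contains(triple);
    }
}

class GraphDemo {
    // Client code only ever uses the returned graph, so it works with both.
    static <G, T> G addBoth(GraphOps<G, T> rdf, T first, T second) {
        G graph = rdf.createGraph();
        graph = rdf.add(graph, first);
        graph = rdf.add(graph, second);
        return graph;
    }
}
```

Copying the whole set on every add is the simplest possible strategy, not an efficient one; a real implementation would more likely use a persistent data structure.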

stateless blank node generator

This is how one can create new blank nodes in Commons RDF 0.1 (full javadoc here):

    /** The returned blank node MUST NOT be equal to any existing */
    default BlankNode createBlankNode()
        throws UnsupportedOperationException { ... }

    /** All `BlankNode`s created with the given `name` MUST be equivalent */
    default BlankNode createBlankNode(String name)
        throws UnsupportedOperationException { ... }

The contract on the second createBlankNode is problematic as a map from names to previously allocated BlankNodes has to be maintained somewhere. Of course, I am ruling out strategies relying on hashes e.g. UUID#nameUUIDFromBytes, because the BlankNodes would no longer be scoped and two different blank nodes _:b1 from two different Turtle documents would return the “equivalent BlankNode”. So that means that RDFTermFactory is not stateless and whether the state is within the factory or in a shared state is not relevant.
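The scoping problem with a hash-based strategy is easy to reproduce. In this small sketch, `HashedBlankNodes.blankNodeFor` is a hypothetical helper that derives the blank node from the label alone, using UUID#nameUUIDFromBytes.

```java
import java.nio.charset.StandardCharsets;
import java.util.UUID;

class HashedBlankNodes {
    // Hypothetical hash-based strategy: the node depends only on the label.
    static UUID blankNodeFor(String label) {
        return UUID.nameUUIDFromBytes(label.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        // Imagine these two labels come from two unrelated Turtle documents.
        UUID fromFirstDocument = blankNodeFor("b1");
        UUID fromSecondDocument = blankNodeFor("b1");
        // Same label, same UUID: the two blank nodes have been merged.
        System.out.println(fromFirstDocument.equals(fromSecondDocument));
    }
}
```

Nothing in the hash knows which document the label came from, which is exactly the information that scoping requires.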

I believe that this is outside of the RDF model and that it has no place in the framework. The mapping from name to BlankNode can always be maintained on the user side, using the strategy that fits best. Still, you can see that I defined BlankNode RDF#createBlankNode(String). It’s because I think another contract can be useful here, where a String can be passed as a hint to be retrieved later e.g. when using RDF#uniqueReference. But it’s only a hint; it has no impact on the model itself.
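One way to keep that mapping on the user side is a small per-document scope owned by the parser. `BlankNodeScope` is a hypothetical helper, and the Supplier stands in for a stateless RDF#createBlankNode(); the demo uses UUID::randomUUID purely as an example of such a generator.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;
import java.util.function.Supplier;

// Hypothetical helper owned by a parser: one instance per parsed document.
final class BlankNodeScope<BlankNode> {
    private final Map<String, BlankNode> byLabel = new HashMap<>();
    private final Supplier<BlankNode> fresh;

    // `fresh` stands for a stateless generator such as rdf::createBlankNode.
    BlankNodeScope(Supplier<BlankNode> fresh) { this.fresh = fresh; }

    // Same label within this scope gives the same node; other scopes are unaffected.
    BlankNode forLabel(String label) {
        return byLabel.computeIfAbsent(label, unused -> fresh.get());
    }
}

class BlankNodeScopeDemo {
    public static void main(String[] args) {
        BlankNodeScope<UUID> firstDocument = new BlankNodeScope<>(UUID::randomUUID);
        BlankNodeScope<UUID> secondDocument = new BlankNodeScope<>(UUID::randomUUID);

        UUID a = firstDocument.forLabel("b1");
        UUID b = firstDocument.forLabel("b1");
        UUID c = secondDocument.forLabel("b1");

        System.out.println(a.equals(b)); // same document, same label
        System.out.println(a.equals(c)); // different documents
    }
}
```

The state lives in an object whose lifetime the parser controls, and the module itself stays stateless.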

UnsupportedOperationException

I just do not understand the value in specifying methods that can throw an UnsupportedOperationException in the context of Commons RDF. I mean, how am I expected to recover from such an exception? Does it make sense to allow for partial implementations?

Until I see a good use case for that, I have simply removed those exception declarations from the functions defined in the RDF module.

user side

Finally, let’s see how a library user could define a parser/serializer using the RDF module:

    public class WeirdTurtle<Graph,
                             Triple,
                             RDFTerm,
                             BlankNodeOrIRI extends RDFTerm,
                             IRI extends BlankNodeOrIRI,
                             BlankNode extends BlankNodeOrIRI,
                             Literal extends RDFTerm> {

        private RDF<Graph, Triple, RDFTerm, BlankNodeOrIRI, IRI, BlankNode, Literal> rdf;

        WeirdTurtle(RDF<Graph, Triple, RDFTerm, BlankNodeOrIRI, IRI, BlankNode, Literal> rdf) {
            this.rdf = rdf;
        }

        /* a very silly parser */
        public Graph parse(String input) {
            Triple triple =
                rdf.createTriple(rdf.createIRI("http://example.com/Alice"),
                                 rdf.createIRI("http://example.com/name"),
                                 rdf.createLiteral("Alice"));
            Graph graph = rdf.createGraph();
            return rdf.add(graph, triple);
        }

        /* a very silly serializer */
        public String serialize(Graph graph) {
            Triple triple = rdf.getTriplesAsIterable(graph, null, null, null).iterator().next();
            RDFTerm o = rdf.getObject(triple);
            return rdf.visit(o,
                             iri -> rdf.getIRIString(iri),
                             bn -> rdf.uniqueReference(bn),
                             lit -> rdf.getLexicalForm(lit));
        }
    }

summary

Please allow me to be harsh: I believe that Commons RDF is mostly useless in its current form as it suffers from the many flaws I have described in this article.

As you can expect, I have already shared those concerns on the Commons RDF mailing list, but I was told that “it would be much more valuable to see a patch about your proposal than a quick hack from scratch”. Sadly this is no “quick hack” and there is no small patch.

The good news is that the approach described here works with any RDF implementation on the JVM, including Jena, Sesame, or banana-rdf. And more importantly, it works today!

So if you are interested in a classless – but still classy – and immutable-friendly RDF abstraction for the JVM, I invite you to get in touch with me so that we can define that abstraction together.